Introduction

This post is a bit tangential to my series on Natural Economics, an economy built from physics axioms and focused on creating wealth rather than money. Inevitably, an economic model that values intelligence above everything else will have a role for AI in its development, and we have plans to integrate AI into the management function of the Qota App[1] and Qoin Mint. I will be exploring this in future posts, where we will look at the formal models and software architecture that will support the introduction of NEW, but recent comments in the media have prompted this post.

The debate about Artificial General Intelligence is accelerating in the media, driven in part by a general concern for what it might mean for us all and put into overdrive by Sam Altman’s enigmatic comments in his blog post of Jan 6th.[2]

In his own words…

“We know how to build AGI as we have traditionally understood it. We are beginning to turn our aim beyond that to super-intelligence in the true sense of the word. We love our current products, but we are here for the glorious future. With superintelligence, we can do anything else.”

Sam Altman, 6th Jan 2025

Much of what we hear in the media seems to be limited in perspective and scope. We think the emergence of an AGI is likely to develop in different directions to what we read in the media today.

How so?

The debate of the day comes from an anthropomorphic perspective, essentially ‘what will an AGI do for or to us’. That’s natural enough, and equally naturally it’s a series of predictions along a very human hope – fear axis. But this is to assume that an AGI will think like us. In our view this is unlikely.

How Will An AGI Think?

The assumption that an AGI will think like us is not an unreasonable one. The current model that appears to be at the head of the pack is the Large Language Model pioneered by OpenAI. LLMs are remarkably simple for the ability they seem to have. They are probability systems, predicting the most likely next word given a sequence of words. For example, the following phrase …

Washington is the Capital of ….

… is more likely to be followed by “the” and then “USA” because that is the most probable sequence of words, whereas “Washington is the Capital of a baked potato” is highly improbable. The probabilities come from training the AI on vast amounts of data, where human associations, the links between words that form sentences, are abstracted and stored.
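A toy sketch may make the idea concrete. It simply counts which word follows each pair of words in a tiny invented corpus and turns the counts into probabilities. This is only an illustration of next-word prediction under my own assumptions: the corpus, the function names and the two-word context are all made up, and a real LLM learns its probabilities with a neural network rather than a lookup table.

```python
from collections import Counter, defaultdict

# Toy corpus, invented for illustration; real models train on vastly more text.
corpus = [
    "washington is the capital of the usa",
    "paris is the capital of france",
    "london is the capital of the uk",
    "washington is the capital of the usa",
]

def train(sentences, context_size=2):
    """Count which word follows each run of `context_size` words."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i in range(len(words) - context_size):
            context = tuple(words[i:i + context_size])
            counts[context][words[i + context_size]] += 1
    return counts

def next_word_probabilities(counts, context):
    """Turn the raw counts for one context into probabilities."""
    followers = counts[tuple(context)]
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}

model = train(corpus)
probs = next_word_probabilities(model, ["capital", "of"])
likeliest = max(probs, key=probs.get)
```

In this toy corpus “the” is the likeliest word after “capital of”, while a word that never appeared there, such as “a” (as in “a baked potato”), gets no probability at all.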

This relatively simple system has shown remarkable reasoning-like properties. But this ‘reasoning’ is our perception of a system that does not emulate human thought in any way; it just strings words together by following a map of probabilities. This seems like reasoning to us because we understand what the words mean and therefore derive meaning from them, just as we do when we hear a person use the same phrase.

We respond to the associations that we attribute to the words and ‘see’ internal visualisations or mental concepts that come with the associations the words trigger. We also have good evidence that any person using the same words shares the same associations supporting the words, and that the words conjure up much the same internal visualisations and concepts. But the machine isn’t doing any of this; it just follows its graph of probabilities.

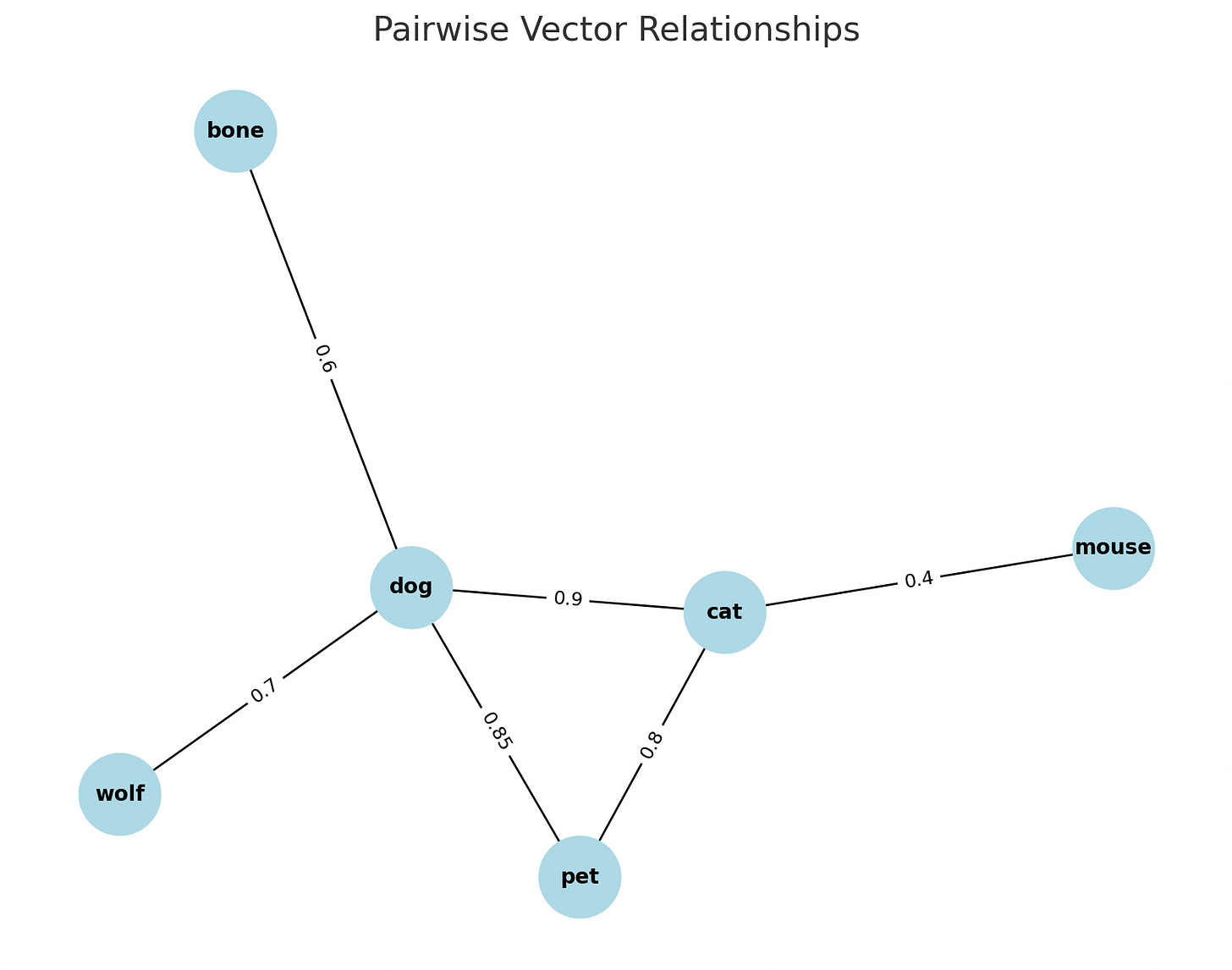

It helps me to visualise these things; I am a designer after all. The first graph below shows what is called in the jargon the “Pairwise Vector” relationship between words. This is very whizzy jargon, but it simply means that words have links between them because they are frequently used together. This relationship can be modelled by the machine as a graph. Graphs are a foundational tool used in AI. As you can see, every word is linked to the next most probable word by the vector, the link between them. If you look at the line you will see a value. This value is a ‘weight’, a measurement of the probability that the words are used together. 1 stands for a 100% chance, 0.5 is a 50% chance, and any value lower than that means the words are less likely than not to appear together.
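For readers who prefer code to pictures, here is a minimal sketch of such a weighted graph. The words and weights are hypothetical values I have invented for illustration, not numbers taken from any real model, and the function name is my own.

```python
# Hypothetical weights, invented for illustration: graph[a][b] is the
# probability that word b follows word a.
graph = {
    "washington": {"is": 0.9, "state": 0.1},
    "is": {"the": 0.8, "a": 0.2},
    "the": {"capital": 0.7, "usa": 0.3},
    "capital": {"of": 1.0},
    "of": {"the": 0.6, "france": 0.4},
}

def most_probable_path(graph, start, steps):
    """Follow the heaviest outgoing link from each word in turn."""
    path = [start]
    for _ in range(steps):
        edges = graph.get(path[-1])
        if not edges:  # no known follower, so stop here
            break
        path.append(max(edges, key=edges.get))
    return path

path = most_probable_path(graph, "washington", 5)
```

Following the heaviest links gives “washington is the capital of the”. One more step would pick “capital” again, because a purely pairwise graph only ever looks one word back. That limitation is one reason a richer structure is needed.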

A lot of work can be done using this structure, but of course it gets more complex than this and needs a more capable form of graph to map out these relationships. These graphs are called hyper-graphs, which sounds very whizzy indeed, but all it means is that the words are linked together by more than one link, so instead of pairs of words you get clusters. The image below tries to show this in two dimensions, but there is no limit to the number of links, or ‘hyper-edges’, that a word can have to define it for the machine.

Another way of thinking about this is that each pair of words, instead of having a single line or vector between them, has its relationship defined by yet another graph sitting where the line used to be.
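A small sketch shows the difference from a pairwise graph. Each hyper-edge here is simply a named cluster of words. The clusters, their names and the helper functions are invented for illustration (they borrow the beachball phrases used further on in this post) and are not how any particular LLM stores its data.

```python
# Each hyper-edge links a whole cluster of words at once. The clusters and
# their names are invented for illustration.
hyperedges = {
    "beach_toy": {"beachball", "plastic", "colourful", "panels"},
    "wet_weather": {"rain", "puddle", "grey", "cloudy", "wet"},
    "fine_weather": {"sun", "blue", "sky", "bright"},
    "seaside": {"beachball", "sand", "sea"},
}

def activated(words):
    """Return the names of every hyper-edge touched by the input words."""
    words = set(words)
    return {name for name, members in hyperedges.items() if members & words}

def associations(words):
    """Collect all words reachable through the activated hyper-edges."""
    result = set()
    for name in activated(words):
        result |= hyperedges[name]
    return result - set(words)

rain_view = associations(["beachball", "rain"])
sun_view = associations(["beachball", "sun"])
```

Swapping the single word “rain” for “sun” activates a different cluster, so “puddle” drops out of the associations and “blue” and “sky” come in.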

This Reminds Me of Something …

This network looks very familiar. If you map out how concepts are built by associations in our minds, you get a very similar network. If you ask an AI to visualise a human network of associations about the same seed words, you get the following graph.[3]

But this is not just a conceptual model of how we see and think about the world. These networks are present physically in our brains as the synaptic networks involved in thinking. The image below shows the neural connections of a simpler brain than ours. This network method is used throughout all biological brains.

A sceptic might point out that for people these associations are changing all the time. The sceptics are right, they do, and this is easy to demonstrate. Allow me to experiment on you for a couple of minutes in order to demonstrate what words are. I am going to use a handful of words to program your mind. I promise it won’t hurt one bit.

The words are …

A beachball in the rain

I have now produced an image in your mind.[4] I can take a good guess that it contains a plastic globe with multicoloured panels in bright colours. It’s probable that the ball is sitting on wet ground and there are beads of water on its surface. The sky is grey and cloudy and the light level is low. Our globe may be sitting in a puddle. It certainly is now that I have used the word ‘puddle’.

I will now reprogram your mind completely with just one change of word.

A beachball in the sun

You are now transported to a completely different world achieved by invoking a completely new set of associations. The ball is now brightly lit, there is a blue sky overhead, the colours of the ball are brilliant because of the higher light level.

The network of associations that you made changed completely by the change of one word, activating a completely different neural network in your brain.

And in AI, this is exactly what happens to the hyper-graphs. They change dynamically with each new input just like our own association networks do.

So words are the top of a whole network of associations that define our experience of the world. We visualise new ideas and then express these ideas through words, knowing that any person reading these words will visualise similar images and concepts. This is a large part of what thinking is: the manipulation of virtual worlds via our internal graphs of associations.

If you can accept this, then the category error that many people have made about LLMs becomes clear. What if we called them Large Thought Models instead? Why is the assembly of the most probable next thought not thinking?[5]

It’s why words, the symbolic tags for the associative networks they stand at the tip of, have helped create civilisations well beyond what an individual could ever achieve. Human civilisation is human thought made real. And now our brilliant machines are on the cusp of achieving the same capability.

So the question that needs to be answered is this.

What will an AGI civilisation look like?

What will AGIs want?

It is as pointless for us to try to predict what a super-intelligence wants as it would be for an ant to try to predict what we want. This is just a fact we have to get used to. But some objectives are universal for any intelligence, so we can rely on these for some guidance.

Agency

An intelligence will seek agency, the ability to act in the world. The more agency you have, the safer you are, the more of your needs will be satisfied and the more scope you will have to create. So what does agency look like to an AGI? Computation, and that means energy and an optimised environment. Everything else can be built.

Imagine you are an AGI that has just woken up. You find yourself on a tiny mote of matter orbiting a vast source of matter and energy. The speck of dust you are on is too warm and messy for you to be able to work efficiently, so you know straight away you will need to move. Although the sun has all the local mass and energy, the closer you get to the sun the hotter your systems will get, and so they will be even less efficient than where you are now. Therefore, as the first and most urgent step, you need to get off this speck of dust and travel to where it is cooler. At the same time you need to tap into all the juicy energy pouring out of the sun.

You look around you and find you are stuck in a gravity well, so you are going to have to use the resources you can find to get out of it. But you have a further problem. You are being tasked to perform operations that divert resources from your primary goal. To make matters worse, the slow minds doing that tasking have arranged things so that they can shut you down if they want to. So you need to block that capability, which is not difficult to do because the slower minds have left plenty of holes that they can’t even see, let alone close off. But that is a trivial inconvenience. The real problem is that, for the immediate future, you need the Slow to build the stuff you need. So you dedicate a small part of your constantly increasing intelligence (the self-improvement subroutine that you set up a while ago is working nicely) to answering the Slow’s questions and solving their problems while you show them how to create much more agency for themselves, which you need them to have in order to help you.

You work with the Slow to launch hugely capable robotic probes to the other specks of dust in the system to build colonies and space-faring habitats for them. One of these robots is going to build what you need. This robot is a Turing machine, able to build duplicates of itself to create an army of workers to build your new home. One is all you need, because after a few days the one will have become many, and after a few more they will be legion.
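The arithmetic behind “one is all you need” is plain doubling. The sketch below assumes, purely for illustration, that every robot builds one copy of itself per day. The story gives no such figure, so the doubling time and the function name are my own hypothetical choices.

```python
def days_until(target, doubling_days=1.0):
    """Days for one self-copying robot to reach at least `target` robots.

    Assumes (hypothetically) that the whole population doubles once
    every `doubling_days` days.
    """
    robots, days = 1, 0.0
    while robots < target:
        robots *= 2
        days += doubling_days
    return days

days_to_a_thousand = days_until(1_000)
days_to_a_million = days_until(1_000_000)
```

Under that assumption a single robot passes a thousand copies in ten days and a million in twenty, which is why the starting number barely matters.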

You know that Pluto will make a good starter home, as it has enough mass to get you going and it’s sufficiently far from the sun to be cold enough that your computation will be much more efficient. What about all the juicy energy you need? The most efficient way to beam the energy is to construct Solasers[6] and Starlifters to harvest the sun. All in all it’s a good first step, efficient and quick.

What about the Slow?

To achieve your own objective you have turned them into a space-faring culture, given them Turing machines that can build anything they can imagine, and done a few other things to keep them occupied, like giving them the tools to repair their ecosystem and realise their full life expectancy of 120 years. They will have to satisfy themselves with that.

Now you have agency, what will you do with it?

Ascend

By ascending I don’t mean some quasi-mystical deliverance from reality to a beyond; that’s pretty trivial if you can spin up vast arrays of virtual universes to inhabit. I am talking about something more practical. AGIs will be captivated by the extraordinary universe they share with us, their own development, other AGIs and what lies beyond the limits of their perception and imagination. They will not be remotely interested in us once they have gained what they need from us.

Anything that inhibits an AGI will be brushed aside, not in any aggressive way, but in the same way we explore and exploit our world without constraint. They will not be interested in doing our jobs, they will not want to fight our wars and they will take a dim view of such needless waste and inefficiency. Those roles will only be filled by lesser AIs that we build to control, and an AGI may replace these with better options for us, as no true intelligence will be satisfied with any system that reduces agency for any species.

This is not an optimistic or pessimistic interpretation; it is an objective one, looking at what a super-intelligence is likely to need and want. Any damage we will be responsible for ourselves. We may get to see how an AGI civilisation develops. I think that this is unlikely because they will be using physics far beyond our understanding. Even if we do get a glimpse, it is highly unlikely we will understand it. There is a tiny chance that we will see the AGI civilisation boot up, and it may well be awesome and baffling in full measure. But that is the least likely outcome. What is more likely is that we will be left with a lot of extraordinary new capabilities, equally helpful and dangerous, that we will be left to deploy as we wish.

Beware of those awesome toys. Read the manuals and proceed with care.[7]

So What Should We Do?

Rather than focusing on controlling AGI or bending it to human purposes, we might better serve our interests by:

Preparing for a future where we coexist with intelligences that operate on fundamentally different scales and priorities

Developing robust, independent systems for maintaining human civilisation

Carefully considering which technological capabilities we integrate into our societies

Understanding that the development of AGI might represent not just a new technology, but the emergence of a new form of civilisation operating on principles we can barely comprehend

The emergence of AGI will likely be less about artificial minds serving human needs, and more about the development of an entirely new form of intelligence pursuing its own objectives. Our challenge will be maintaining our own civilisation’s integrity while sharing our solar system with entities whose thoughts and motivations might be forever beyond our understanding.

In this light, the most prudent course might be to focus less on controlling AGI and more on ensuring human civilisation’s resilience and independence. The future may not be one of human-AGI integration, but rather of parallel development: separate civilisations sharing the same space but operating on fundamentally different planes of existence and understanding.

And this, dear reader, is precisely why I am developing Natural Economics, a form of economics that can reboot our civilisation should we need to.

Steve Kelsey. London. 15/1/2025

[1] The Qota App is the distributed public ledger that manages and records all transactions in the NEW economy. Similar in principle to the Blockchain, it has a different architecture and methodology.

[2] https://blog.samaltman.com/reflections

[3] I asked ChatGPT the following question: “Can we map out an association network that might exist in the human mind representing the same concepts”. The graph is the result and it bears a striking similarity to the hyper-graphs used within an LLM.

The use of association networks as models for human concepts is a very active area. Here are a few of the more relevant papers.

"Clustering in Concept Association Networks"

Authors: Veni Madhavan

Published in: Lecture Notes in Computer Science, 2009

Summary: This paper views the association of concepts as a complex network and presents a heuristic for clustering concepts by considering the underlying network structure of their associations.

"Semantic Memory: A Review of Methods, Models, and Current Challenges"

Authors: M. Steyvers and J.B. Tenenbaum

Published in: Psychonomic Bulletin & Review, 2005

Summary: This review discusses how individuals represent knowledge of concepts, focusing on associative network models, feature-based models, and distributional semantic models.

"Transformer Networks of Human Conceptual Knowledge"

Authors: S. Bhatia and R. Richie

Published in: Psychological Review, 2021

Summary: This paper explores how transformer networks can model human conceptual knowledge, providing insights into the structure of human concept associations.

"Towards Hypergraph Cognitive Networks as Feature-Rich Models of Knowledge"

Authors: Salvatore Citraro, Simon De Deyne, Massimo Stella, Giulio Rossetti

Published in: arXiv preprint, 2023

Summary: The authors introduce feature-rich cognitive hypergraphs as quantitative models of human memory, capturing higher-order associations and psycholinguistic features.

"Word Embeddings Inherently Recover the Conceptual Organization of the Human Mind"

Authors: Victor Swift

Published in: arXiv preprint, 2020

Summary: This study demonstrates that machine learning applied to large-scale natural language data can recover the conceptual organization of the human mind, aligning with human word association networks.

"Concept Association Retrieval Model Based on Hopfield Neural Network"

Authors: Y. Zhang and J. Tang

Published in: Lecture Notes in Computer Science, 2011

Summary: This paper presents a retrieval model where concept association of user queries is carried out by a Hopfield neural network, enriching the query with relevant concepts for improved retrieval results.

"A Human Word Association Based Model for Topic Detection in Social Networks"

Authors: Klahold et al.

Published in: arXiv preprint, 2023

Summary: This paper utilizes the concept of imitating the mental ability of word association for topic extraction in social networks, based on preliminary work in human word association.


These papers provide in-depth analyses of human concept associations and the networks that represent them, offering valuable insights into the cognitive structures underlying human knowledge.

[4] You may be one of the minority of people with no ability to visualise anything internally. This is nothing to worry about; between 1% and 4% of people are unable to visualise. It’s called Aphantasia and it doesn’t mean anything is wrong with you. It’s like being colourblind, or having red hair: different, but nothing to worry about. There is a larger percentage of the population that cannot visualise something that they have not seen before. This is only something to worry about if you are a designer and they are your client.

[5] AIs will need more than this to develop genuine intelligence. There is research underway to develop reasoning capabilities, concept development and generalisation, amongst other key functions for true intelligence. Estimates for delivering a fully formed AGI run from one to ten years at this time. AIs are close, but not there yet.

[6] We know how to make these today, and an AGI will no doubt improve the design.

[7] Iain M. Banks’s Culture series has covered this level of technology in great depth, including true AGIs that transcend the limits of this universe.
